The University of Electro-Communications (UEC) in Tokyo, Japan, has released details of its latest research into radar recognition of humans for autonomous driving applications.

According to the research, radar-based sensors have become an essential component of driver assistance systems and self-driving vehicles because they can robustly distinguish nearby pedestrians and other traffic-relevant objects. However, in addition to working in bad weather, automated recognition systems must be able to cope with so-called non-line-of-sight (NLOS) situations, in which the line of sight between detector and object is obstructed. In traffic, NLOS situations occur when pedestrians are hidden from view; for example, a child behind a parked car who is about to run into the street.

Associate professor Shouhei Kidera and his research team at UEC claim to have developed a radar-based detection method for recognizing humans in NLOS situations. The scheme is based on reflection and diffraction signal analysis and machine learning techniques.

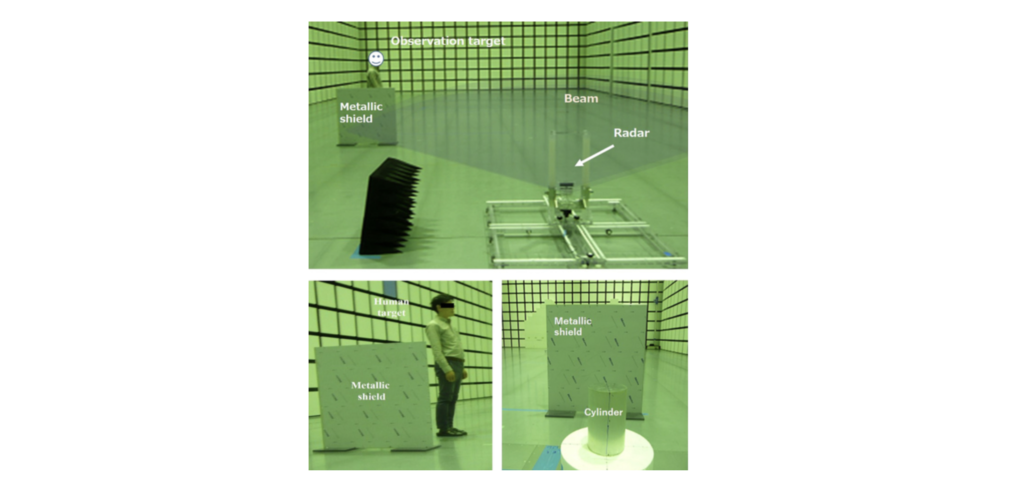

The researchers performed radar experiments in an anechoic chamber, with a metallic plate placed in the chamber so that an NLOS situation arose when a target object moved behind the plate from the radar’s point of view. A 24 GHz radar and two target objects were used in the experiments: a 30 cm-long metallic cylinder and a human wearing light-colored clothes. Three regimes were investigated: complete NLOS, partial NLOS (target object positioned at the border between the NLOS and LOS zones) and complete LOS. The signals received by the detector were intrinsically different for the metallic cylinder and the human: even when a human stands still, breathing and small movements related to posture control cause changes in the reflected wave signals. The scientists found that these differences are enhanced by diffraction effects, that is, the ‘bending’ of waves around the edges of the metallic plate.

The researchers applied a machine-learning algorithm to the reflection and diffraction signals to let their sensing device learn the difference between a human and a non-human object. A recognition rate of up to 80% was achieved. They also performed experiments with an actual car as the shielding object, which led to similar results and additional understanding of the dependence of the recognition success rate on the radar’s position relative to the target. Also, by carrying out additional experiments with a human performing a stepping motion, the scientists were able to recognize whether a human is standing still or walking, even in complete NLOS situations.
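To make the idea concrete, the following is a minimal illustrative sketch in Python, not the researchers' method. The article does not say which features or classifier were used, so everything here is an assumption: the synthetic echo model (`simulate_echo`), the single fluctuation feature (`fluctuation_feature`), the simple threshold classifier (`train_threshold`) and all numeric parameters are hypothetical. The sketch only shows why a breathing human and a static metal object can be separable at 24 GHz. The wavelength is about 12.5 mm, so chest motion of a few millimeters shifts the echo phase by several radians, while a motionless cylinder returns an almost constant echo. Diffraction and the NLOS geometry are not modeled.

```python
import math
import random

# Assumption: c is rounded to 3.0e8 m/s, giving a wavelength of about 12.5 mm at 24 GHz.
WAVELENGTH_M = 3.0e8 / 24.0e9


def simulate_echo(is_human, rng, n_samples=200, sample_rate_hz=20.0):
    """Return synthetic (I, Q) samples of one echo observed over about 10 s.

    Hypothetical model: a human adds a sinusoidal chest displacement of
    2-5 mm; a static object adds none. Both get small receiver noise.
    """
    breathing_hz = rng.uniform(0.2, 0.4)
    chest_amp_m = rng.uniform(0.002, 0.005) if is_human else 0.0
    base_phase = rng.uniform(0.0, 2.0 * math.pi)
    samples = []
    for k in range(n_samples):
        t = k / sample_rate_hz
        displacement = chest_amp_m * math.sin(2.0 * math.pi * breathing_hz * t)
        # Round-trip path change 2*d corresponds to a phase shift of 4*pi*d/lambda.
        phase = base_phase + 4.0 * math.pi * displacement / WAVELENGTH_M
        samples.append((math.cos(phase) + rng.gauss(0.0, 0.05),
                        math.sin(phase) + rng.gauss(0.0, 0.05)))
    return samples


def fluctuation_feature(samples):
    """Variance of the complex echo around its mean (spread of I plus spread of Q)."""
    n = len(samples)
    mean_i = sum(i for i, _ in samples) / n
    mean_q = sum(q for _, q in samples) / n
    return sum((i - mean_i) ** 2 + (q - mean_q) ** 2 for i, q in samples) / n


def make_dataset(n_per_class, rng):
    """Build features and labels (True = human) from synthetic echoes."""
    features, labels = [], []
    for is_human in (True, False):
        for _ in range(n_per_class):
            features.append(fluctuation_feature(simulate_echo(is_human, rng)))
            labels.append(is_human)
    return features, labels


def train_threshold(features, labels):
    """Place the decision threshold midway between the two class means."""
    human = [f for f, y in zip(features, labels) if y]
    other = [f for f, y in zip(features, labels) if not y]
    return 0.5 * (sum(human) / len(human) + sum(other) / len(other))


def accuracy(threshold, features, labels):
    """Fraction of echoes whose prediction (feature > threshold) matches the label."""
    correct = sum((f > threshold) == y for f, y in zip(features, labels))
    return correct / len(labels)


rng = random.Random(0)  # fixed seed so the sketch is repeatable
train_x, train_y = make_dataset(50, rng)
test_x, test_y = make_dataset(50, rng)
threshold = train_threshold(train_x, train_y)
print(f"threshold={threshold:.3f}, "
      f"test accuracy={accuracy(threshold, test_x, test_y):.2f}")
```

Because the synthetic data is far cleaner than real NLOS measurements, the accuracy this sketch prints says nothing about the recognition rate reported above; real echoes weakened and distorted by the shielding object would need richer features and a more capable classifier.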

The results, remarked Kidera, mark an important step toward feasible self-driving car technology, though he noted that “there should be further investigation using other classifiers or features, which is our important future work”.

The full paper can be found here.