Three decades after they were first applied to crash analysis, supercomputers provide ever more powerful, reliable and time-saving virtual simulations. Automotive Testing Technology International takes a look at the technology and its growing influence on the crash testing process.

The ever-increasing power of supercomputers is making virtual crash test simulations more predictive. Designed to perform multiple highly complex calculations simultaneously, supercomputer hardware has evolved over three decades to the point where a typical OEM system has 256 cores. “Development is mind-boggling,” says Uwe Schramm, chief technical officer at Altair. “We now have nodes with 192 cores.” Rising computing power naturally enables more intricate simulation detail. “We have finite element models [FEMs] with 10 and 20 million elements,” Schramm notes. “There is more fidelity in the models, but if there are 500 or 1,000 cores, each core must work as hard as the others. The simulation has to be distributed over the cores, and this is not easy for crash simulations because you’re subdividing the model across the different cores.”
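To illustrate the subdivision problem Schramm describes, the sketch below uses recursive coordinate bisection (RCB), one common way of splitting a finite element model into equally sized groups: it repeatedly cuts the set of elements along its longest spatial axis. It is a toy under stated assumptions, not how any particular solver works: it treats every element as equal work, represents each element only by a centroid, and ignores contact. The names `rcb_partition` and `load_imbalance` and the grid of centroids are hypothetical.

```python
from typing import List, Tuple

# Hypothetical illustration: each element is represented only by its centroid (x, y, z).
Point = Tuple[float, float, float]


def rcb_partition(centroids: List[Point], n_parts: int) -> List[List[int]]:
    """Assign element indices to n_parts groups by recursive coordinate bisection.

    Assumes every element costs the same to compute, so balancing the element
    count balances the work per core. Contact and material cost are ignored.
    """

    def split(ids: List[int], parts: int) -> List[List[int]]:
        if parts == 1:
            return [ids]
        if not ids:
            return [[] for _ in range(parts)]
        # Cut along the axis on which this subset of elements is most spread out.
        extents = [
            max(centroids[i][axis] for i in ids) - min(centroids[i][axis] for i in ids)
            for axis in range(3)
        ]
        axis = extents.index(max(extents))
        ordered = sorted(ids, key=lambda i: centroids[i][axis])
        # Divide elements in proportion to how many parts each side will receive.
        left_parts = parts // 2
        cut = len(ordered) * left_parts // parts
        return split(ordered[:cut], left_parts) + split(ordered[cut:], parts - left_parts)

    return split(list(range(len(centroids))), n_parts)


def load_imbalance(parts: List[List[int]]) -> float:
    """Ratio of the busiest core's element count to the average (1.0 is perfect)."""
    sizes = [len(p) for p in parts]
    return max(sizes) / (sum(sizes) / len(sizes))


if __name__ == "__main__":
    # Toy "model": a 10 x 10 x 10 grid of element centroids (1,000 elements).
    grid = [(float(x), float(y), float(z)) for x in range(10) for y in range(10) for z in range(10)]
    parts = rcb_partition(grid, 8)
    print([len(p) for p in parts])  # → [125, 125, 125, 125, 125, 125, 125, 125]
    print(load_imbalance(parts))    # → 1.0
```

A production crash solver would also weight elements by their computational cost and try to minimize the boundary shared between partitions, since every shared boundary means communication between cores; this sketch only shows why an even subdivision matters when each core "must work as hard as the others".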

Most high-performance computing (HPC) systems run on Linux or Windows, and the use of just two operating systems has simplified the market for software and structural analysis solvers: programs that contain comprehensive material and rupture libraries and solve the underlying numerical equations. In addition, the improved scalability of massively parallel processing (MPP) versions of solver code has enabled detailed crash analyses to be completed in hours, while automation has further streamlined the process, with machine learning tools the main driver of refinements.

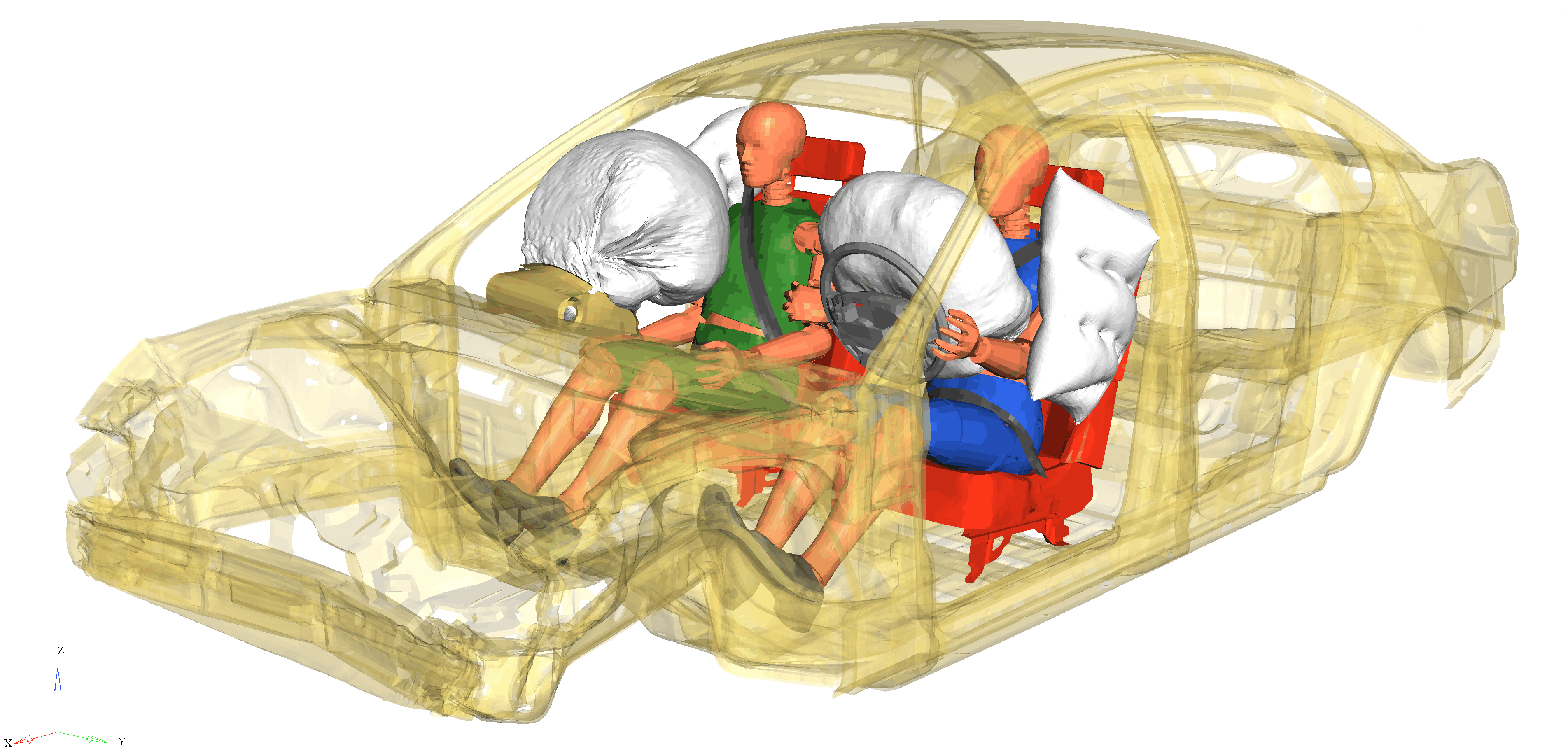

As well as cost and time-to-market savings, supercomputer simulations enable deeper analysis of passive safety systems. “Engineers are able to understand what happens to the structural parts of the vehicle and how they behave,” says Cristian Jimenez, business development manager of body development at Spanish engineering services company Applus+ Idiada Group. The robustness of the results has also improved. “With simulations, engineers can analyze the vehicle’s performance, changing the values of the boundary conditions. In hours they can understand if the changes have produced a different performance or not.”

Matthew Avery, director of research at Thatcham Research and a Euro NCAP board member, believes there is scope for even greater robustness. “We have a catalog of 338 crash types, but in reality there are probably 338,000 configurations. The ability of virtual testing to achieve a notably more robust understanding is great.”

Even eight years ago, the number of crash test simulations carried out in a car’s development cycle was extremely high. “There would often be tens of thousands of crash simulations, but the physical testing is perhaps one-tenth of that,” says Schramm.

During the development of its Kodiak model, Škoda simulated 99% of crash tests. “Simulations offer a relatively deep insight into what happens during a crash,” explains Tomáš Kubr, Škoda’s head of functionality development, calculations and series care. “When we run a physical crash test, all we can do is evaluate the results and data from pre-installed sensors. We can’t install sensors everywhere, though. If, after evaluating a particular crash test, we decide to test other parameters, then we would have to destroy another car. With supercomputing, we just change the input data and repeat the test.”

Predictive accuracy

As computing power moves on, material testing and calibration are becoming far more accurate. “Accuracy is not a question anymore,” says Schramm. “All the simulations are predictive. We can predict exactly what will happen in the physical test. We repeat that test in the simulation environment and can change sheet metal thickness in a keystroke. It’s prohibitive to do that with a physical prototype. An important part of any crash simulation is accurately modeling the material and its failure. A crash happens in 100 milliseconds, but it’s a sequential event and parts fail as the crash goes through the car. If a rivet fails in a physical car, then it should fail at the same time in the simulation.”

Jimenez notes that the accuracy of a virtual test depends on the information fed into the simulation. “You need to give data to the solver; the most important is the materials’ mechanical behavior. Having a good database of this material characterization means the level of accuracy is very good. So good, in fact, that development decisions can be made taking only the simulations into account.”

“Simulation technology gives you the ability to learn about your design, to understand scatter, and develop things to make decisions,” adds Schramm. “The more computing power that you put into the simulation, the better your design outcome is going to be. In the future, we will see more design decisions driven by numerical experimentation and simulation, but physical tests will still be necessary to design new materials with physical principles that can be put into simulation software.”

As powerful as they may be, supercomputer simulations still need a human element for validation. “The benefits of the simulation depend not only on high computing resources, but also on the knowledge engineers have to analyze the data,” Jimenez says.

“A lot of validation is completed using simulation,” adds Schramm. “But whatever the regulator requires in physical testing, you have to do. The notion that the physical test is the truth and the simulation is a fake is not a valid assumption: you can make mistakes with your physical test. If there are setup problems or the material variability is too high, a simulation is more reliable than a physical test.”

From a regulatory viewpoint, accuracy is vital, Avery notes: “We have to have absolute confidence that what we test is real, so we want a real finished vehicle. Virtual crash models are not robust enough yet. I don’t think it’s computing power, I think it’s the accuracy of the different elements within the virtual model. They need to be more complicated, and there needs to be a much better understanding of how the variables work. There is a process that we’re going toward, but it’s going to be a really long time before manufacturers produce a virtual model, the regulator signs it off, and the legal and consumer testing bodies agree that it’s ready.”

Cloud confidentiality

Harnessing the power of cloud technology offers notable benefits, such as reduced reliance on in-house resources and the removal of scalability and job-size limits, but there are drawbacks. “Cloud computing is a benefit due to the optimization of the resources and faster results, but it is not heavily used due to the confidentiality of those results,” says Jimenez.

Budget also comes under scrutiny. “The cloud is limited by cost, and it is usually more expensive than an on-premises system,” notes Schramm.

Some of this cost is due to the need for numerous solvers and licenses. “Each OEM has its own preferences related to the simulation solver, so we must have all the licensed solvers available,” explains Jimenez. “Additionally, we need all the dummies and barrier models, which require a yearly license subscription. Cloud computing offers on-demand services, but the license strategy has not yet been developed for on-demand usage.”

“In the future, we will start to see more hybrid environments,” Schramm states. “If a company is developing a vehicle that needs crash computing resources for just six months, compared with an on-site computer and its cooling, power and storage requirements, the cloud is a much better solution in terms of economics.”

As HPC systems become ever more powerful, on-site administration and storage become more important. “You need to condition the computers – sometimes they are water cooled, and that’s a very big issue,” says Schramm. “It’s a big infrastructure. That’s another benefit of the cloud. There are huge data centers and somebody else takes care of the energy management and infrastructure.”

For Jimenez, future developments of the technology and the potential of artificial intelligence systems are something to look forward to. “I am really excited about what will happen with the AI inside the FEM simulations. What we are trying to do is to solve the physics, but with artificial intelligence we’re learning what happens in vehicles in different situations. We are starting to replicate the performance of the car. The first plug-in AI algorithms inside solvers have been developed over the past year – now we need to really understand how we can use them to improve our simulations.”

EDITOR’S NOTE: This article was first published in Automotive Testing Technology International’s sister publication, Crash Test Technology International 2020 – the world’s first and only magazine dedicated to crash test technology and implementation. Supported by the world’s leading crash test equipment manufacturers and service providers, the magazine highlights the latest trends, developments and technological advancements in safety systems testing.