Lucas Garcia, product manager at MathWorks, shares how verification and validation processes for AI-enabled systems can ensure safety in AI applications and how engineers are refining these processes in various industries, including aviation and automotive.

As AI regulation evolves, these efforts contribute to the responsible and transparent deployment of AI technologies in safety-critical systems.

Policymakers worldwide are making concerted moves towards establishing AI regulation and distinct frameworks for its deployment across industries. Late last year, the White House issued an executive order on AI regulation, highlighting the importance of robust verification and validation processes for AI-enabled systems.

This was swiftly followed by the UK establishing the AI Safety Institute, the first government-backed organization focused on advancing artificial intelligence safety in the interests of the public. In March, the European Union unveiled the AI Act, which mandates that AI companies report and test models to ensure that AI systems meet specified requirements.

With AI increasingly in the crosshairs of governments and regulators, engineers designing AI-enabled systems are under growing pressure to meet new and evolving specifications. Verification and validation (V&V) processes will significantly impact safety-critical systems.

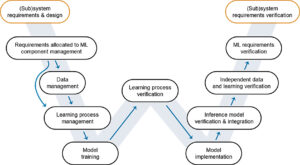

How do verification and validation work in AI-enabled systems?

Verification determines whether an AI model is designed and developed per the specified requirements, whereas validation checks whether the product meets the client’s needs and expectations. V&V techniques help engineers confirm that the AI model’s outputs meet requirements, detect bugs quickly and reduce the risk of data bias.

One advantage of using AI in safety-critical systems is that AI models can approximate physical systems and be used to validate the design. Engineers simulate entire AI-enabled systems and use the resulting data to test the systems in different scenarios. Performing V&V in safety-critical scenarios helps ensure that an AI-enabled safety-critical system can maintain its performance level under various circumstances.

Most industries that develop AI-enhanced products require engineers to comply with standards before going to market. These certification processes ensure that specific elements are built into these products. Engineers perform V&V to test the functionality of these elements, which makes it easier to obtain certifications.

Tools such as Deep Learning Toolbox Verification Library and MATLAB Test help engineers in industries such as aviation and automotive adhere to these industry standards by streamlining the verification and testing of AI models within larger systems.

V&V processes for AI in safety-critical systems

When performing V&V, the engineer’s goal is to ensure that the AI component meets the specified requirements and is reliable under all operating conditions. This is to ensure it is safe and ready for deployment.

V&V processes for AI vary slightly across industries, but there are four overarching steps:

- Analyzing the decision-making process to solve the black box problem.

- Testing the model against representative data sets.

- Conducting AI system simulations.

- Ensuring the model operates within acceptable bounds.

Each step is outlined below. Engineers continue to refine and improve these steps as they collect new data, gain new insights and integrate operational feedback.

Analyzing the decision-making process to solve the black box problem

Engineers can use two methods to solve the common black box problem, a regular occurrence when an AI model is used to add automation to a system.

The first is feature importance analysis, a technique that helps engineers identify which input variables have the biggest impact on a model’s predictions. Although the analysis works differently for different models, the general procedure assigns a feature importance score to each input variable. A higher score signifies that the feature has a greater impact on the model’s decision.
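
As an illustration of the scoring idea, here is a minimal sketch of permutation importance, one common way to compute feature importance scores. It is not the article's specific method: the `model` function, the synthetic data and every name below are hypothetical stand-ins. The score for a feature is the average increase in prediction error when that feature's values are shuffled across the data set.

```python
import random


def model(row):
    # Hypothetical stand-in for a trained model: it depends strongly on
    # feature 0, weakly on feature 1, and not at all on feature 2.
    return 3.0 * row[0] + 0.5 * row[1]


def mean_squared_error(rows, targets):
    return sum((model(r) - t) ** 2 for r, t in zip(rows, targets)) / len(rows)


def permutation_importance(rows, targets, n_repeats=20, seed=0):
    """Score each feature by the error increase caused by shuffling it."""
    rng = random.Random(seed)
    baseline = mean_squared_error(rows, targets)
    scores = []
    for j in range(len(rows[0])):
        increases = []
        for _ in range(n_repeats):
            # Shuffle column j only, leaving the other features untouched.
            column = [r[j] for r in rows]
            rng.shuffle(column)
            shuffled = [r[:j] + [v] + r[j + 1:] for r, v in zip(rows, column)]
            increases.append(mean_squared_error(shuffled, targets) - baseline)
        scores.append(sum(increases) / n_repeats)
    return scores


# Synthetic data (an assumption for this sketch): 200 rows, 3 features.
data_rng = random.Random(42)
rows = [[data_rng.uniform(-1, 1) for _ in range(3)] for _ in range(200)]
targets = [model(r) for r in rows]

scores = permutation_importance(rows, targets)
for index, score in enumerate(scores):
    print(f"feature {index}: importance {score:.3f}")
```

In this toy setup the first feature should receive the highest score and the unused third feature a score of zero, matching the reading that a higher score means a greater impact on the model's decision.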

Second, explainability techniques offer insights into the model’s behavior. This is especially relevant when the black box nature of the model prevents the use of other approaches. In the context of images, these techniques identify the regions of an image that contribute the most to the final prediction.
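
One explainability technique of this kind is occlusion sensitivity: hide one patch of the image at a time and record how much the model's score drops. The sketch below assumes a hypothetical `classifier_score` function standing in for a trained image classifier and a tiny 6x6 grayscale image; it illustrates the idea and is not a production implementation.

```python
def classifier_score(image):
    # Hypothetical stand-in for a trained classifier: the score is the mean
    # brightness of the central 2x2 region of a 6x6 grayscale image.
    return sum(image[r][c] for r in (2, 3) for c in (2, 3)) / 4.0


def occlusion_map(image, patch=2, fill=0.0):
    """Return a heat map of score drops when each patch is occluded."""
    base = classifier_score(image)
    height, width = len(image), len(image[0])
    heat = [[0.0] * width for _ in range(height)]
    for top in range(0, height, patch):
        for left in range(0, width, patch):
            rows = range(top, min(top + patch, height))
            cols = range(left, min(left + patch, width))
            # Copy the image and blank out one patch.
            occluded = [row[:] for row in image]
            for r in rows:
                for c in cols:
                    occluded[r][c] = fill
            drop = base - classifier_score(occluded)
            # A large drop means the patch mattered to the prediction.
            for r in rows:
                for c in cols:
                    heat[r][c] = drop
    return heat


image = [[1.0] * 6 for _ in range(6)]
heat = occlusion_map(image)
for row in heat:
    print(" ".join(f"{value:.1f}" for value in row))
```

Only the central patch produces a score drop here, so the heat map highlights exactly the region the stand-in classifier relies on.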

Testing the model against representative data sets

Engineers often evaluate performance in real-world scenarios where the safety-critical system is expected to operate. They gather a wide range of real-world representative data sets suitable for test cases, designed to evaluate various aspects of the model. The model is then applied to the data sets, with the results recorded and compared to the expected output.
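
The record-and-compare loop described above can be sketched as a small test harness. Everything here is assumed for illustration: the `model` function is a hypothetical braking rule rather than a trained network, and the four test cases are invented examples of representative scenarios.

```python
def model(speed_kmh, distance_m):
    # Hypothetical stand-in for the AI component: request braking when the
    # time to reach the obstacle is under 2 seconds.
    speed_ms = speed_kmh / 3.6
    if speed_ms <= 0:
        return "cruise"
    return "brake" if distance_m / speed_ms < 2.0 else "cruise"


# Invented representative test cases: (speed in km/h, distance in m).
test_cases = [
    {"name": "urban, close obstacle", "inputs": (50, 10), "expected": "brake"},
    {"name": "urban, distant obstacle", "inputs": (50, 100), "expected": "cruise"},
    {"name": "highway, medium distance", "inputs": (120, 60), "expected": "brake"},
    {"name": "stationary vehicle", "inputs": (0, 5), "expected": "cruise"},
]


def evaluate(cases):
    """Apply the model to every case and record mismatches."""
    failures = []
    for case in cases:
        actual = model(*case["inputs"])
        if actual != case["expected"]:
            failures.append((case["name"], case["expected"], actual))
    return {"total": len(cases),
            "passed": len(cases) - len(failures),
            "failures": failures}


report = evaluate(test_cases)
print(f"{report['passed']}/{report['total']} cases passed")
for name, expected, actual in report["failures"]:
    print(f"FAILED {name}: expected {expected}, got {actual}")
```

Keeping the failures as named records, rather than a single accuracy figure, makes it easier to trace a failed requirement back to the scenario that exposed it.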

Conducting AI system simulations

Simulating an AI-enabled system enables engineers to evaluate the system’s performance in a controlled environment, typically one that mimics a real-world system. Engineers define the inputs and parameters to simulate a system, before executing the simulation using software such as Simulink, which outputs the system’s responses to the proposed scenario.
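
The article names Simulink as the simulation tool; the Python sketch below only illustrates the same workflow of defining inputs and parameters, running the simulation and inspecting the response. The `learned_controller` function is a hypothetical stand-in for an AI component, the vehicle is reduced to a single speed state, and the 0.1 m/s tolerance is an assumed requirement.

```python
def learned_controller(speed_error):
    # Hypothetical stand-in for a learned controller: acceleration command
    # proportional to the speed error, clipped to +/- 3 m/s^2.
    return max(-3.0, min(3.0, 0.8 * speed_error))


def simulate(initial_speed, target_speed, duration_s=30.0, dt=0.1):
    """Closed-loop simulation with a fixed time step; returns the speed trace."""
    speed = initial_speed
    trace = [speed]
    for _ in range(round(duration_s / dt)):
        speed += learned_controller(target_speed - speed) * dt
        trace.append(speed)
    return trace


# Invented scenarios: (initial speed, target speed) in m/s.
scenarios = {
    "accelerate from rest": (0.0, 25.0),
    "slow down": (30.0, 20.0),
    "hold speed": (15.0, 15.0),
}

results = {}
for name, (initial, target) in scenarios.items():
    trace = simulate(initial, target)
    # Assumed requirement: settle within 0.1 m/s of the target speed.
    results[name] = abs(trace[-1] - target) <= 0.1

for name, passed in results.items():
    print(f"{name}: {'pass' if passed else 'fail'}")
```

A real system simulation would replace the one-line plant update with a full vehicle and environment model, but the structure of scenario in, response trace out, requirement check at the end stays the same.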

Ensuring the model operates within acceptable bounds

Engineers employ data bias mitigation and robustification techniques to ensure AI models operate safely and within acceptable bounds.

First, data augmentation supports fairness and equal treatment of different classes and demographics. In the case of a self-driving car, this may involve using varied pictures of pedestrians to help the model detect a pedestrian regardless of their positioning. Data balancing is often paired with data augmentation, and involves including a similar number of samples from each data class. Using the pedestrian example, this means ensuring the data set contains a proportionate number of images for each variation of pedestrian scenarios. Together, these techniques reduce bias and improve the model’s ability to generalize across real-world situations.
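
A minimal sketch of pairing balancing with augmentation follows, assuming images are small nested lists and that a horizontal flip is a valid augmentation for the task. The labels, image contents and function names are hypothetical; a real pipeline would draw on a wider set of transformations.

```python
import random
from collections import Counter


def horizontal_flip(image):
    # Mirror each row of the image left to right.
    return [row[::-1] for row in image]


def balance_with_augmentation(samples, seed=0):
    """Oversample minority classes with flipped copies until classes match."""
    rng = random.Random(seed)
    by_label = {}
    for image, label in samples:
        by_label.setdefault(label, []).append(image)
    target = max(len(images) for images in by_label.values())
    balanced = list(samples)
    for label, images in by_label.items():
        for _ in range(target - len(images)):
            balanced.append((horizontal_flip(rng.choice(images)), label))
    return balanced


# Invented, imbalanced data set: 6 "standing" and 2 "crouching" pedestrians,
# each represented by a tiny 2x2 image.
samples = [([[i, i + 1], [i + 2, i + 3]], "standing") for i in range(6)]
samples += [([[9, 8], [7, 6]], "crouching"), ([[5, 4], [3, 2]], "crouching")]

balanced = balance_with_augmentation(samples)
counts = Counter(label for _, label in balanced)
print(dict(counts))
```

Flipping alone produces near-duplicates of the minority images, so this approach evens out class counts but does not replace collecting genuinely new examples of under-represented scenarios.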

Robustness is a concern when deploying neural networks in safety-critical situations. Neural networks are susceptible to misclassification: small, imperceptible changes to an input can cause a network to produce incorrect or dangerous results. Integrating formal methods into the development and validation process is a common solution. Formal methods use rigorous mathematical models to establish and prove correctness properties of neural networks, which supports higher robustness and reliability in safety-critical applications.
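
One family of formal methods for this purpose is interval bound propagation, which pushes a box of possible inputs through the network to bound every output. The sketch below is an illustration under assumptions, not the method of any particular tool: the two-layer network, its weights and the perturbation sizes are all invented. The check is sound but conservative: a `True` result proves that no input within the perturbation bound changes the predicted class, while `False` only means the property could not be proven.

```python
def affine_bounds(lower, upper, weights, bias):
    """Propagate elementwise input bounds through y = W x + b."""
    out_lo, out_hi = [], []
    for w_row, b in zip(weights, bias):
        lo = hi = b
        for w, l, u in zip(w_row, lower, upper):
            if w >= 0:
                lo += w * l
                hi += w * u
            else:
                lo += w * u
                hi += w * l
        out_lo.append(lo)
        out_hi.append(hi)
    return out_lo, out_hi


def relu_bounds(lower, upper):
    return [max(0.0, l) for l in lower], [max(0.0, u) for u in upper]


# Invented toy network: 2 inputs -> 2 hidden ReLU units -> 2 class scores.
W1, B1 = [[1.0, -1.0], [-1.0, 1.0]], [0.0, 0.0]
W2, B2 = [[1.0, 0.0], [0.0, 1.0]], [0.0, 0.0]


def verify_robust(x, epsilon, true_class):
    """True if every input within +/- epsilon of x keeps true_class on top."""
    lower = [v - epsilon for v in x]
    upper = [v + epsilon for v in x]
    lower, upper = relu_bounds(*affine_bounds(lower, upper, W1, B1))
    lower, upper = affine_bounds(lower, upper, W2, B2)
    return all(lower[true_class] > upper[k]
               for k in range(len(lower)) if k != true_class)


small = verify_robust([1.0, 0.0], epsilon=0.1, true_class=0)
large = verify_robust([1.0, 0.0], epsilon=0.6, true_class=0)
print(small, large)
```

For this toy network the property is proven for the 0.1 perturbation and not for 0.6, where an input such as (0.4, 0.6) does flip the prediction.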

Conclusion

As engineers continue to refine V&V processes for AI, it is essential to keep exploring a variety of testing approaches that address the increasingly complex challenges of AI technologies. In safety-critical systems, these efforts help ensure AI is used safely and transparently. Increasing attention from policymakers on how AI is deployed across industries will make the adoption of AI technologies more challenging, but ultimately it is likely to lead to more responsible deployment.